What you need to know

First off, I assume you have some familiarity with C#, or with programming in general. It also helps to have written at least a little code in a shader language before; this tutorial uses HLSL. The rest is what this tutorial is for!

The final result

You can download the code example here (or you can scroll down to find some explanations). The final result will look something like this:

Normal maps

Normal maps are textures in which the color of each pixel encodes the surface normal at that pixel. How does this work? Take the normal map used in the code example alongside the original texture:

The left texture contains the regular colors, and on the right is the generated normal map, whose colors define the normals. To calculate the actual normal vector, we have to apply a little transformation:

$$normal = 2.0 * pixelcolor - 1.0$$

To explain this better, take the most common color in the picture, a light blue-ish color. In RGB values it is about $(128, 128, 255)$, which maps to the range [0,1] as $(0.5, 0.5, 1.0)$. After applying our transformation the value becomes $(0.0, 0.0, 1.0)$, which is a normal pointing in the Z direction.

In our 2D game this normal points from the screen towards the viewer. As you can see in the normal image, there are several red and green colored portions; these tilt the normal along the X and Y axes. With this information we can add fake depth to a plain 2D texture!
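To try the decoding step outside the shader, here is a minimal CPU-side sketch in Python. The function name `decode_normal` is made up for this illustration, and it assumes 8-bit color channels (0 to 255). Note that 128 does not map to exactly 0.5, so the X and Y components come out very slightly above zero rather than exactly zero.

```python
def decode_normal(rgb):
    """Map an 8-bit RGB color to a normal vector with components in [-1, 1]."""
    # First bring each channel into [0, 1], then apply normal = 2 * color - 1.
    return tuple(2.0 * (channel / 255.0) - 1.0 for channel in rgb)


# The dominant light-blue color of the normal map decodes to roughly (0, 0, 1).
print(decode_normal((128, 128, 255)))
```

A reddish pixel such as (255, 128, 128) would decode to a normal leaning almost entirely along the X axis instead.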

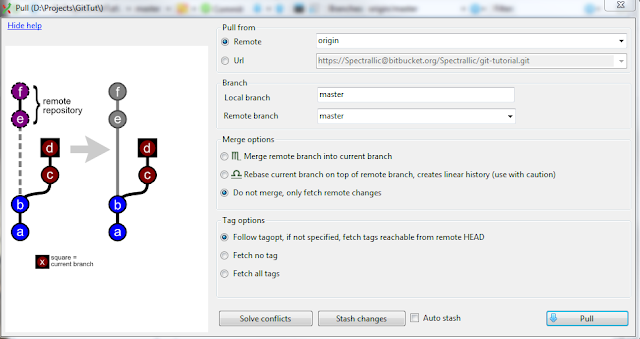

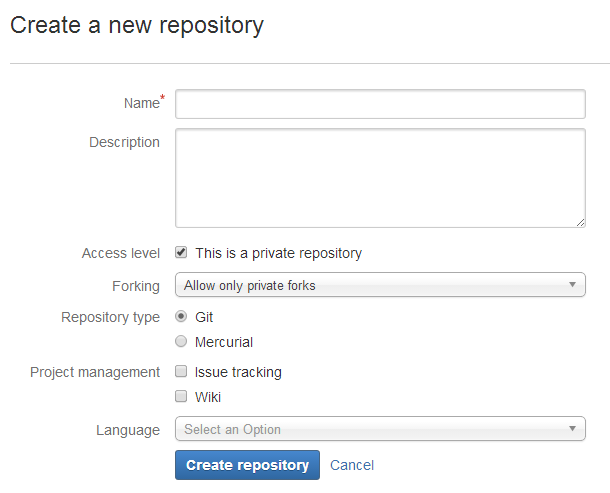

The drawing setup

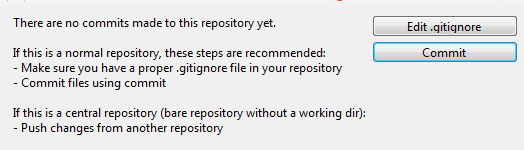

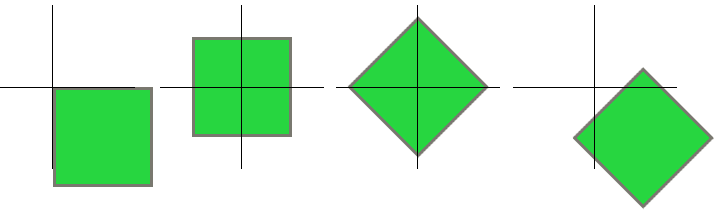

To pass this information to our lighting effect we need to have this normal map ready, which means we can't draw everything on the screen immediately. The setup used for this comes close to deferred shading. We only need the color and normal buffers, since the depth buffer is of no use here.

All the regular sprites are drawn first, as you're used to, except that this time we save them to a rendertarget. After that I draw all of the normals to another rendertarget. Unlike standard deferred shading, I chose to render the lights to a separate rendertarget here. If you wish, you can combine drawing the textures and drawing the lights into a single pass. They are kept apart here merely to show the different rendering steps.
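The tutorial does not spell out how the color and light rendertargets are merged into the final image. A common choice, and purely an assumption here, is to multiply each color pixel by the light accumulated at that pixel. The sketch below models the two buffers as nested Python lists of RGB tuples in [0, 1]; `combine` is a hypothetical helper, not part of the downloadable code.

```python
def combine(color_buffer, light_buffer):
    """Multiply each color pixel by the light value at the same position.

    Both buffers are lists of rows, each row a list of (r, g, b) tuples.
    Multiplying is an assumed blend mode, not taken from the tutorial.
    """
    return [
        [
            tuple(c * l for c, l in zip(color_px, light_px))
            for color_px, light_px in zip(color_row, light_row)
        ]
        for color_row, light_row in zip(color_buffer, light_buffer)
    ]


# A 1x2 "screen": the first pixel is half lit, the second is in darkness.
colors = [[(1.0, 0.5, 0.25), (1.0, 1.0, 1.0)]]
lights = [[(0.5, 0.5, 0.5), (0.0, 0.0, 0.0)]]
print(combine(colors, lights))
```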

The actual lighting magic

Because it's a 2D game, you might expect to need to draw a "lighting texture", something like this:

But we don't need to! Since we have a normal, we can simply apply the technique used to draw a light in 3D, which looks fancy and is easy to create. The effect I used for this tutorial is called Diffuse Reflection, or Lambertian Reflectance. We set up a point light (that is, a point from which light emanates) and calculate the pixel color on the GPU.

Diffuse reflection requires three things: the position of the light, the position of the current pixel being shaded, and the normal at that position. From the first two you can calculate the light direction, and the dot product of the light direction and the normal gives you the lighting coefficient.
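Written out as a formula, with symbols that are my own shorthand rather than notation from the code: let $L$ be the light position, $P$ the position of the pixel, and $\hat{n}$ the unit normal at that pixel. The light direction $\hat{l}$ and the lighting coefficient $c$ are then:

$$\hat{l} = \frac{L - P}{\lVert L - P \rVert}, \qquad c = \max(0, \hat{n} \cdot \hat{l})$$

For example, with $\hat{n} = (0, 0, 1)$ and a light directly in front of the pixel, $\hat{l} = (0, 0, 1)$ and $c = 1$, so the pixel is fully lit. If the light sits at 45 degrees to the side, $\hat{l} \approx (0.707, 0, 0.707)$ and $c \approx 0.707$. A light behind the surface gives a negative dot product, which the $\max$ clamps to zero.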

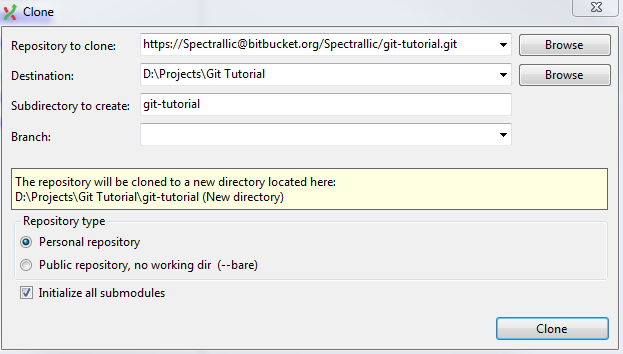

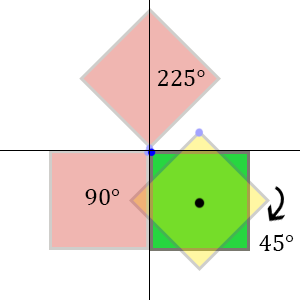

Sometimes you will want to rotate the normal retrieved from the normal map, for instance when the sprite itself is drawn rotated. This is done by creating a separate rotation matrix and passing it to the shader. More information about creating such a matrix can be found in my other tutorial series on rotations.
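As a rough illustration of what such a rotation does to a normal, here is a small Python sketch. It assumes the sprite rotates in the screen plane, so the normal is rotated around the Z axis; `rotate_normal_z` is a hypothetical name, and the sign of the angle depends on whether your Y axis points up or down on screen.

```python
import math


def rotate_normal_z(normal, angle_radians):
    """Rotate a normal around the Z axis (the axis pointing out of the screen)."""
    x, y, z = normal
    c, s = math.cos(angle_radians), math.sin(angle_radians)
    # Standard 2D rotation applied to X and Y; Z is left untouched.
    return (c * x - s * y, s * x + c * y, z)


# A normal leaning along +X, on a sprite rotated by a quarter turn,
# ends up leaning along +Y (up to floating point rounding).
print(rotate_normal_z((1.0, 0.0, 0.0), math.pi / 2))
```

In the shader the same operation would be a matrix multiplication on the decoded normal, done before the lighting calculation.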

Finding the correct normal on the pixel position is rather easy: we have a full screen buffer of normals, and a position given by the draw call. Dividing this position by the screen size, we have the texture coordinates of the normal pixel ranging in [0,1]. Exactly what we need!
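The lookup can be sketched on the CPU as follows. This is only an illustration: `pixel_to_texcoords` is a made-up helper, and the optional half-pixel offset mirrors the `0.5f / screenSize` line in the shader listing.

```python
def pixel_to_texcoords(pixel, screen_size, half_pixel_offset=True):
    """Convert a pixel position to texture coordinates in [0, 1]."""
    px, py = pixel
    width, height = screen_size
    u, v = px / width, py / height
    if half_pixel_offset:
        # Shift by half a pixel so the sample lands on the pixel center.
        u += 0.5 / width
        v += 0.5 / height
    return (u, v)


# The middle of an 800x600 screen maps to roughly (0.5, 0.5).
print(pixel_to_texcoords((400, 300), (800, 600)))
```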

Code example

All of this code can be found in the source code, but I'd like to point out a few things in this article. Here's the code used for lighting in HLSL:

    // Basic XNA vertex shader
    float4x4 MatrixTransform;

    // Parameters used by the lighting code. They are declared elsewhere in the
    // full effect file; the types here are assumptions inferred from their usage.
    float4x4 InverseVP;
    float2 screenSize;
    float3 LightPosition;
    float LightRadius;
    float LightIntensity;
    float4 LightColor;
    sampler NormalSampler;

    void SpriteVertexShader(inout float4 color : COLOR0,
                            inout float2 texCoord : TEXCOORD0,
                            inout float4 position : SV_Position)
    {
        position = mul(position, MatrixTransform);
    }

    // Calculates diffuse light with attenuation and normal dot light.
    // Defined before the pixel shader: HLSL needs a function declared before its first use.
    float4 CalculateLight(float3 pos, float3 normal)
    {
        float3 lightDir = LightPosition - pos;

        // Fade linearly from 1 at the light to 0 at LightRadius
        float attenuation = saturate(1.0f - length(lightDir) / LightRadius);

        lightDir = normalize(lightDir);
        float NdL = max(0, dot(normal, lightDir));
        float4 diffuseLight = NdL * LightColor * LightIntensity * attenuation;

        return float4(diffuseLight.rgb, 1.0f);
    }

    float4 PixelShaderFunction(float2 position : SV_POSITION,
                               float4 color : COLOR0,
                               float2 TexCoordsUV : TEXCOORD0) : COLOR0
    {
        // Obtain texture coordinates corresponding to the current pixel on screen
        float2 TexCoords = position.xy / screenSize;
        TexCoords += 0.5f / screenSize;

        // Sample the normal map and decode the color into a normal
        float4 normal = 2.0f * tex2D(NormalSampler, TexCoords) - 1.0f;

        // Build a homogeneous point (w = 1) so it can be multiplied by the 4x4 matrix,
        // then transform it back with the inverse view-projection matrix
        float4 newPos = float4(position.xy, 0.0f, 1.0f);
        float4 pos = mul(newPos, InverseVP);

        // Calculate the lighting with given normal and position
        float4 lighting = CalculateLight(pos.xyz, normal.xyz);

        return lighting;
    }

As you can see, the shader consists of a vertex and a pixel shader. The vertex shader simply passes on the color, texture coordinates and position. It only transforms the position with the given matrix. After the vertex shader we know the rectangle on the screen, and the pixel shader will process all the pixels inside it.

The first thing the pixel shader does is derive the texture coordinates from the position. This is done by dividing the position by the screen size and adding half a pixel (so we sample at the center of the pixel). With this coordinate we can sample the normal map to get the color of the normal and, as shown earlier in this article, calculate the actual normal from it. Next we retrieve the original position by multiplying with the inverse view-projection matrix. We can now calculate the lighting with the given parameters.

The light calculation itself is nothing more than the dot product of the normal and the light direction, which tells us how strongly the surface should be lit. Not to forget the attenuation: it looks at the distance to the light relative to the light's radius and fades the result smoothly to zero at the edge of that radius.
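To check the numbers by hand, here is a CPU-side Python port of `CalculateLight`. It is a sketch rather than the shader itself: the light parameters are passed as arguments instead of shader globals, and a pixel located exactly at the light position is treated as unlit, which is a choice made for this example because normalizing a zero vector is undefined.

```python
import math


def calculate_light(pos, normal, light_pos, light_radius, light_color, light_intensity):
    """Diffuse light with linear attenuation, mirroring the HLSL CalculateLight."""
    to_light = tuple(l - p for l, p in zip(light_pos, pos))
    distance = math.sqrt(sum(c * c for c in to_light))

    # saturate(1 - distance / radius): 1 at the light, 0 at or beyond the radius.
    attenuation = min(1.0, max(0.0, 1.0 - distance / light_radius))

    # Normalize the light direction; a zero-length vector stays zero (assumption).
    if distance > 0.0:
        light_dir = tuple(c / distance for c in to_light)
    else:
        light_dir = (0.0, 0.0, 0.0)

    n_dot_l = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    rgb = tuple(n_dot_l * c * light_intensity * attenuation for c in light_color[:3])
    return rgb + (1.0,)


# A flat pixel facing the viewer, with a white light halfway inside its radius.
print(calculate_light((0, 0, 0), (0, 0, 1), (0, 0, 50), 100.0, (1, 1, 1), 1.0))
```

With the light 50 units away and a radius of 100, the attenuation is 0.5, so the pixel receives half of the light's color.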

Optimization

You want to draw a lot of lights, right? Normal deferred shading can't handle a lot of point lights, since you have to redraw the whole screen for every point light. Thus follows the first optimization: if we only draw a small square on the screen where we expect the light to shine, we don't have to draw the rest of the screen. This is done quite simply by adding a light radius, from which we can create a rectangle to draw with SpriteBatch.

Since we don't need to draw any textures but still have to make a draw call, I found the following optimization. SpriteBatch requires a texture for every draw call, so the best way to use our previous optimization is to draw a single pixel and scale it up to the square. This way, the pixel shader can sample the normal map and output the lighting on the positions given by the draw call. In the code I just pass the normal map as the texture for simplicity.
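The rectangle derived from the light radius can be sketched like this. The helper name `light_rectangle` and the `(x, y, width, height)` layout are assumptions for illustration, and the sketch takes the light position and radius to be in screen pixels.

```python
def light_rectangle(light_x, light_y, radius):
    """Return (x, y, width, height) of the square covering the light's reach."""
    size = 2 * radius
    return (light_x - radius, light_y - radius, size, size)


# A light at the center of an 800x600 screen with a radius of 100 pixels.
print(light_rectangle(400, 300, 100))  # (300, 200, 200, 200)
```

Only the pixels inside this square run the lighting pixel shader, so the cost of a light scales with its radius rather than with the screen size.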

I hope you learned something from this tutorial, and sure hope to see some awesome games created with this effect!